Generalization Analysis of Asynchronous SGD Variants

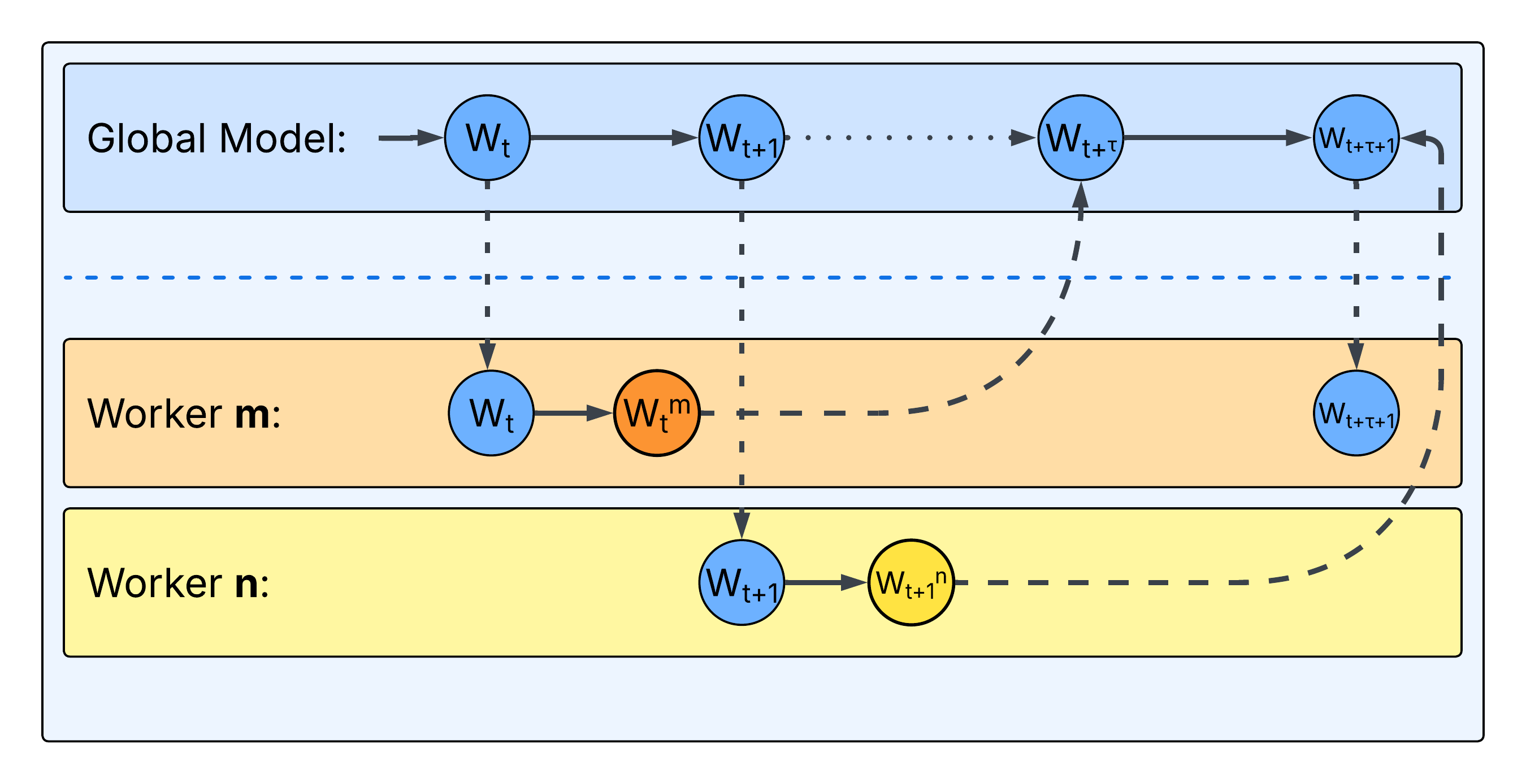

Asynchronous Stochastic Gradient Descent (ASGD) improves training efficiency by letting parallel workers update the model parameters without waiting for one another. The cost is staleness: a worker's gradient may have been computed on parameters that other workers have since updated.
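To make the notion of staleness concrete, here is a minimal single-process sketch. It is not taken from the project's code: the function `stale_sgd`, its `delay` parameter, and the toy quadratic objective are all hypothetical and chosen for illustration only. It simulates a fixed delay by evaluating each gradient on the parameters from `delay` updates earlier.

```python
from collections import deque


def stale_sgd(grad, w0, lr=0.1, delay=2, steps=200):
    """Gradient descent in which every applied gradient was computed
    on the parameters from `delay` updates earlier (hypothetical helper)."""
    w = w0
    # Keep the last `delay + 1` parameter values; history[0] is the oldest.
    history = deque([w0] * (delay + 1), maxlen=delay + 1)
    for _ in range(steps):
        stale_w = history[0]        # parameters a slow worker read earlier
        w = w - lr * grad(stale_w)  # the update is applied to the current w
        history.append(w)
    return w


def grad(w):
    # Gradient of the toy objective f(w) = (w - 3)^2 (an assumed example).
    return 2.0 * (w - 3.0)


w_sync = stale_sgd(grad, w0=0.0, delay=0)   # no staleness: plain gradient descent
w_async = stale_sgd(grad, w0=0.0, delay=2)  # gradients are two updates old
```

With this step size, both runs approach the minimizer at `w = 3`. Real ASGD differs in that delays vary per worker and gradients are stochastic, so the sketch only illustrates the mechanism, not the behavior studied in the report.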

While convergence of ASGD algorithms is well established, their impact on generalization is less explored.

Our study shows that, despite staleness, Asynchronous SGD methods achieve convergence comparable to that of standard SGD and generalization that is equal or better.

Project Report

Project Repository

Code Documentation

- src.core package

- src.models package

- src.data package

- src.experiments package

- src.config module

- src.run_tests module

GitHub Repository
